Social media users took to X to alert supporters of former President Donald Trump that Google appeared to be suppressing or altering various search results to favor his new opponent, Vice President Kamala Harris.

In one set of tweets and news stories, journalists discovered that Google’s autocomplete feature would not complete queries related to the attempted assassination of Trump. In a statement, Google said that no “manual action [was] taken on these predictions” and that its systems include “protections” against autocomplete predictions “associated with political violence,” adding, “We’re working on improvements to ensure our systems are more up to date.”

This suggests that Google has some sort of policy that suppresses autocomplete predictions about recent instances of political violence. Older examples of political violence were autocompleted, including other presidential assassination attempts, the shootings of Rep. Gabby Giffords and Rep. Steve Scalise, and the attempted assassination of Slovakian Prime Minister Robert Fico in May 2024.

So, while there is a potentially neutral explanation for the suppression in Trump’s case, the news prompted journalists and activists to search for more examples of potential bias in Google Search.

And they found more. Posting on X, various users reported multiple apparent cases of Google suppressing Trump. When users searched for “President Donald Trump,” autocomplete would not complete the query and would instead suggest “President Donald Duck” or Donald Regan, an apparent reference to former Treasury Secretary Donald Regan. The names of all other presidents autocompleted normally. Strangely enough, in my own searches, just “Donald Trump” did autocomplete.

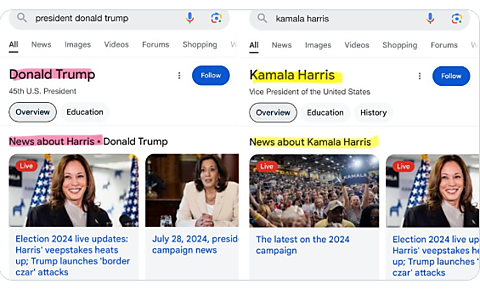

When users actually searched for Donald Trump, Google did not return ordinary news about Trump. Instead, Google appeared to alter the query so that it showed news about both Kamala Harris and Trump. Searching for Kamala Harris, however, returned normal results focused only on news about Harris.

While it is possible that Google has a policy against autocompleting very recent cases of political violence, it is harder to explain why “President Donald Trump” did not autocomplete the way every other president’s name did. Similarly, there is no obvious reason for adding Kamala Harris to searches focused only on Donald Trump.

It is possible that these are glitches or oversights; after all, tech platforms are massively complicated combinations of automated systems and policies. The sheer number of mistakes or glitches, however, starts to strain credulity.

But mistakes do happen, as another recent case of apparent bias shows: Meta began to label the famous picture of Donald Trump pumping his fist after the assassination attempt as misinformation and to demote it. This was a mistake. Meta’s fact-checking program had identified an altered version of the photo in which the Secret Service agents were photoshopped to look as though they were smiling. The fact check should have applied only to that doctored photo, but Meta’s automated enforcement systems mistakenly applied it to the original, real photo too. The timing could not have been worse, since users were already looking for instances of tech bias.

Now, if Google’s search misfires were not mere mistakes, it is possible that some rogue engineers are altering search functionality without the approval of Google HQ. It is also possible that Google has implemented policies that directly or indirectly suppress various searches related to Donald Trump.

No one other than Google knows exactly what is going on, and it is not a good look. Even as I write this post, some of these examples appear to have been fixed or changed. In the aftermath of Google Gemini’s high-profile failure, in which the new AI model refused to generate many images of Caucasians, the appearance of bias has fueled continued political accusations against Google.

Former President Trump accused Meta and Google of censoring him, saying “GO AFTER META AND GOOGLE.” Elon Musk posted several times on X to call attention to these search results and to accuse Google of a “massive amount of hidden censorship.” Rep. Chip Roy (R-TX) wrote on X that he had verified the “censoring” of searches about the Trump assassination attempt. Donald Trump Jr. took to X to accuse Google of election interference to help Kamala Harris. Senator Roger Marshall (R-KS) said he would be launching an “official inquiry” into Google’s “suppressing” of searches about Trump.

While at least some of the apparent bias may be due to neutral policies against recommending searches about recent political violence, or to mistakes made by automated systems, it is possible that bias is at work in other instances. But it is important to recognize that tech companies, like TV stations, journalists, bookstores, and other businesses, have the right to be biased. They can use their products to advance speech they agree with and suppress speech they disagree with.

Yet doing so can also be dangerous for their business. If an organization built on connecting people with other people and information is seen as suppressing certain types of political content, then users will find alternatives. Indeed, many alternative search engines are available that appeal to different user interests, offering promises of enhanced privacy or anonymity, support for environmental causes, or a focus on music and imagery.

If Google is biased here, then it is worth noting that users have another tech platform, X, to thank for creating a space where users, journalists, billionaires, and politicians alike could connect and share this information.

So, before we rush to demand that the government regulate or punish tech companies for their potentially biased choices, remember that the robust market for technology services is already working to deliver users more choices and better products.