Meta’s Oversight Board, an organization with the authority to review Meta’s decisions and to take down or leave up pieces of content, recently affirmed that Meta should leave up a post by far‐right French politician Éric Zemmour that condemns African immigration on the grounds that it “colonizes Europe.”

While many might reject that sort of rhetoric as a dog whistle for more racist tropes and beliefs, it is also true that immigration is a major point of political contention around the world. Indeed, Zemmour received 7 percent of the vote in the first round of the 2022 French presidential election.

While Zemmour’s views are abhorrent, the challenge in this case is whether strident anti‐immigrant speech, even regarding potential conspiracy theories, causes enough harm on Meta’s platforms to justify the suppression of core political speech.

For those who care about free expression, the board’s decision to allow this content is worth celebrating. Removing offensive speech that is not closely linked to objective and imminent harm may be counterproductive because it fails to address the underlying problems. Europe already has aggressive and growing layers of hate speech laws; the politician in this case has been convicted of hate speech multiple times.

And yet, “hate speech” and those accused of being its greatest purveyors in Europe have only grown in popularity. Rather than stopping “hate speech” and protecting the vulnerable, Europe’s censorial policies are emboldening these speakers.

Suppressing speech, whether through government censorship or through social media companies exercising editorial control over their platforms by removing content, does not actually address the root causes of “hate speech.” Meta is a private company that can set its own standards, and if it wants to remove all speech it finds objectionable, that is its right.

But through its Oversight Board, Meta hopes to find the right standards and norms for when it should remove content that is too harmful and when it should allow content that is offensive and objectionable.

In this case, though, while the board decided to allow the content, it did not uniformly assert the importance of societies having uncomfortable discussions about big political and social issues.

The board took a new step by issuing a substantial minority opinion arguing that this content was too harmful and should have been removed. Although the board has included minority views in its decisions before, those views have always been limited to a couple of paragraphs at most. In this decision, the minority opinion is not only given substantial space but is longer than the majority opinion. As a result, the decision reads more like a justification for removing speech about immigration that refers to colonizing, invading, or replacement.

On the one hand, it is worthwhile to see a more vigorous minority opinion and debate within Oversight Board decisions. Deliberative bodies benefit from a robust and public debate over key issues, including documenting disagreements within their decisions.

On the other hand, it is concerning to see that a strong minority of the board (strong enough to exert considerable control over the drafting of the final decision) leaned into what is, in essence, a bad tendency test: a free speech doctrine, abandoned by US courts decades ago, holding that speech can be suppressed if it has a tendency to produce some harmful outcome. During World War I, this standard was widely used to convict anti‐war advocates under the Espionage Act of 1917, treating their questioning of the rationale for joining the war as seditious. In a striking parallel to this Oversight Board case, the bad tendency test was used to justify the conviction of Socialist Party leader Eugene Debs, who had received 6 percent of the US presidential vote in 1912, for criticizing the draft during wartime.

In this case, the minority of the board felt that Zemmour’s content criticizing immigration or immigrants should be removed because its cumulative impact “contributes to the creation of an atmosphere of exclusion and intimidation.” Given that the peaceful speeches and pamphlets of World War I activists were similarly viewed as contributing to an atmosphere of sedition because of the “tendency of the article to weaken zeal and patriotism,” such a rationale is ripe for abuse and inconsistent application.

Take the similar use of the term “colonize” in the context of the Israel/Palestine conflict. One of the key assertions of the Palestinian side is that the root cause of the ongoing conflict is that Israel is a “colonial project.” But surely the board, like most fair‐minded people, would not want to suppress such arguments; doing so would silence one side of a major geopolitical debate.

The inevitably inconsistent application of such rules injects the biases of policymakers into decisions about whose speech is good and whose is bad. And while these pronouncements may seem fine when they target disfavored views, these same laws can be, have been, and will continue to be weaponized in profoundly unjust ways.

In the slaveholding South, for instance, the bad tendency test was used to outlaw abolitionist speech on the grounds that “the unavoidable consequences of [abolitionist] sentiments is to stir up discontent, hatred, and sedition among the slaves.”

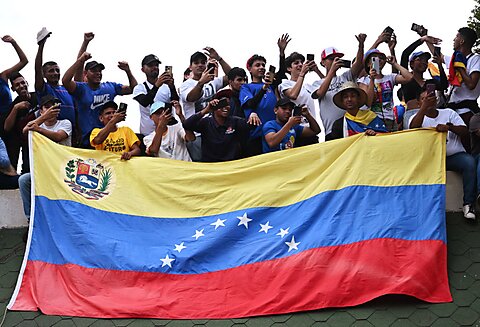

Today, Germany’s Network Enforcement Act (commonly known as NetzDG), which was meant to stop online hate speech, has served as a model for similar laws in over a dozen other nations, many of them authoritarian regimes such as Russia, Belarus, Vietnam, and Venezuela. Such regimes are happy to cite the German law as justification for silencing any “hateful” online dissent.

If we want to better tackle hate speech and protect minorities, we must not reflexively reach for the tools of suppression. In this case, merely silencing Zemmour will not defeat the ideas that animate him and many others. It is better for everyone to know the views of a person who wants to be a political leader, and for civil society to answer his assertions and beliefs with counter‐speech. Only then can the fears, anger, and views that animate such beliefs be effectively addressed, both online and offline.